The human brain is an intricate network of neurons, each firing signals and transmitting information in complex patterns that underlie our thoughts, actions, and experiences. Cognitive health is not just a matter of maintaining these neural networks; it’s also about refining and optimizing them. This brings us to a crucial but often overlooked aspect of neural functioning: neural backpropagation.

What if I told you that neurons don’t just send signals in one direction, but also have a feedback mechanism that allows them to ‘learn’ from their mistakes? Intriguing, right? Neural backpropagation is a fascinating process by which neurons can adjust their activities based on the accuracy of their output. In simpler terms, it’s a way for neurons to refine their communication, thereby contributing to learning and memory.

Contents

- The Basics of Neural Anatomy and Function

- What is Neural Backpropagation?

- How Neural Backpropagation Works

- Role of Neural Backpropagation in Learning and Memory

- Neural Backpropagation Implications for Cognitive Health

- Neural Backpropagation in Artificial Neural Networks

- References

The Basics of Neural Anatomy and Function

Before we dive into the world of neural backpropagation, it’s important to establish a foundational understanding of neurons and how they generally function. This will allow us to appreciate the nuanced ways neurons can adjust and refine their signaling, which is at the heart of neural backpropagation.

Structure of a Neuron

Neurons are the fundamental units of the brain, responsible for receiving, processing, and transmitting information. Understanding their structure is crucial to comprehending how they function and, eventually, how they can refine their activities.

Cell Body

The cell body, also known as the soma, is the central hub of the neuron. It contains the nucleus and is responsible for the neuron’s basic metabolic functions. It’s from here that the rest of the neuron extends.

Dendrites

Dendrites are branch-like structures that protrude from the cell body. Their primary role is to receive information from other neurons and transmit it towards the cell body. Think of them as the ‘antennae’ of the neuron, always ready to pick up signals.

Axon

The axon is a long, slender projection that extends from the cell body. It’s like a transmission line, conducting electrical impulses away from the cell body towards other neurons or target cells. Some axons are covered in a myelin sheath, which helps to speed up signal transmission.

Synaptic Connections

Having covered the basic structure of a neuron, let’s explore how neurons communicate with each other. This interaction occurs at specialized junctions known as synapses [1].

Synapse

A synapse is the junction between the axon terminal of one neuron and the dendrites or cell body of another, separated by a microscopic gap called the synaptic cleft. This is where the magic happens: neurotransmitters are released into the cleft to relay messages from one neuron to the next.

Neurotransmitters

Neurotransmitters are chemical messengers that transmit signals across the synaptic gap. When an action potential (or nerve impulse) reaches the end of an axon, it triggers the release of neurotransmitters. These chemicals then bind to receptors on the receiving neuron, either exciting or inhibiting it.

Firing of a Neuron

Now that we understand the structure and synaptic connections, it’s time to discuss what happens when a neuron actually fires.

Action Potential

An action potential is an electrical signal that travels along the axon. It is generated at the axon hillock, the region where the axon leaves the cell body, as the result of a precise sequence of electrical events. When the membrane potential there crosses a certain threshold, the neuron fires, sending this electrical signal down its axon.

All-or-None Principle

Neurons operate on an “all-or-none” principle. This means that a neuron either fires a full-strength action potential or doesn’t fire at all. The strength of the stimulus doesn’t change the size of the action potential; instead, a stronger stimulus typically makes the neuron fire more frequently.
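To make the all-or-none idea concrete, here is a minimal sketch of a leaky integrate-and-fire neuron, a common textbook simplification rather than a full biophysical model. The function name `simulate_lif` and every parameter value (a -55 mV threshold, a 10 ms membrane time constant, and so on) are illustrative assumptions. Each spike the model emits is an identical event; a stronger input current changes only how many spikes occur, not their size.

```python
def simulate_lif(input_current, duration_ms=200.0, dt=0.1, tau_m=10.0,
                 v_rest=-70.0, v_reset=-70.0, v_threshold=-55.0,
                 resistance=10.0):
    """Simulate a leaky integrate-and-fire neuron driven by a constant current.

    Returns the list of spike times in milliseconds. All parameter values
    are illustrative assumptions, not measurements of a real neuron.
    """
    v = v_rest
    spike_times = []
    for step in range(int(duration_ms / dt)):
        # The membrane potential leaks back toward rest while the input pushes it up.
        dv = (-(v - v_rest) + resistance * input_current) / tau_m
        v += dv * dt
        if v >= v_threshold:
            # All-or-none: crossing threshold produces one identical spike event,
            # after which the potential is reset.
            spike_times.append(step * dt)
            v = v_reset
    return spike_times


if __name__ == "__main__":
    for current in (1.0, 2.0, 4.0):
        spikes = simulate_lif(current)
        print(f"input {current}: {len(spikes)} spikes in 200 ms")
```

With these assumed values, an input of 1.0 never reaches threshold (the potential levels off at -60 mV), while larger inputs produce progressively more spikes of the same kind.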

Neural Circuits and Networks

Individual neurons are impressive, but their true power lies in their collective networking.

Neurons don’t operate in isolation; they are part of intricate networks and circuits that enable complex processes like thought, emotion, and action. These networks can be simple, involving only a few neurons, or incredibly complex, involving millions. By working in conjunction, neurons can process information more efficiently and enable complex cognitive functions.

What is Neural Backpropagation?

The concept of neural backpropagation takes our understanding of neurons to the next level, introducing the idea that neurons can actually “learn” from their outputs to improve future performance.

Definition and Basic Description of Neural Backpropagation

Neural backpropagation, often simply referred to as “backpropagation,” is a process through which neurons adjust their internal parameters based on the accuracy or error of their output. Imagine a scenario where you are learning to play a musical instrument. At first, you may hit some wrong notes, but as you practice, your brain refines the motor skills needed to produce the correct sound. This is analogous to backpropagation in neurons: they “learn” from past activities and adjust their parameters to improve future outputs [2].

Historical Background of Neural Backpropagation

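The concept of neural backpropagation is not new; it has its roots in both biological studies and computational models of neural networks. It was popularized in the 1980s, notably through the 1986 work of Rumelhart, Hinton, and Williams, as a learning algorithm for artificial neural networks, which were loosely inspired by biological neural systems. However, the idea that neurons might have some sort of ‘backward’ flow of information to refine their operations has been speculated upon by neuroscientists for several decades. Although the term “backpropagation” was initially more prevalent in computer science, it has gained traction in neuroscience as researchers have explored its biological plausibility. Note that neuroscientists also use “neural backpropagation” for a related but distinct phenomenon: action potentials that travel from the axon’s initial segment back into the dendrites, which are thought to signal to synapses that the neuron has fired and to contribute to synaptic plasticity.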

How Neural Backpropagation Differs from Standard Neural Transmission

Standard neural transmission generally follows a ‘feedforward’ mechanism, where signals are propagated from the dendrites to the cell body and then along the axon, ultimately leading to the release of neurotransmitters at the synapse. The focus is usually on the ‘forward’ flow of information.

In contrast, backpropagation introduces a ‘feedback’ mechanism. This process involves an ‘error signal’ being sent in the reverse direction, from the output end back toward the input, enabling the neuron to adjust its parameters. Essentially, backpropagation allows neurons to make more accurate future predictions or actions by learning from the errors in their past outputs.

How Neural Backpropagation Works

Now that we’ve defined what neural backpropagation is and how it differs from standard neural transmission, the natural next step is to explore the mechanisms that make it work. Understanding these intricacies will help us appreciate the role of backpropagation in learning and memory, and its implications for cognitive health.

Theory Behind Backpropagation

The theoretical framework of neural backpropagation is rooted in the idea of error minimization and weight adjustment. The idea is that neurons, or artificial neural networks designed to mimic them, can improve their performance by adjusting how much importance (weight) they give to incoming signals based on the ‘error’ in their output.

Error Minimization

In backpropagation, ‘error’ refers to the difference between the actual output and the desired output, or target. Imagine a neuron that’s part of a neural circuit involved in muscle coordination. If the circuit’s output results in a clumsy movement, the ‘error’ would be the degree to which the movement deviates from the intended, coordinated motion. The idea is to minimize this error over time.

Weight Adjustments

The concept of ‘weights’ in neural parlance refers to the strength or influence of connections between neurons. In the context of backpropagation, these weights are adjusted based on the calculated error. Each weight is changed in proportion to how much its connection contributed to the error, in whichever direction reduces that error; a connection that pushed the output the wrong way ends up with less influence in future computations.
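A minimal sketch of this kind of error-driven weight adjustment is the delta rule (also called least-mean-squares) for a single linear unit. It is shown only to illustrate the principle; the helper names, example data, and learning rate are assumptions, and nothing here is claimed to be how real synapses compute their changes.

```python
def predict(weights, inputs):
    """Weighted sum of inputs: a single linear 'neuron'."""
    return sum(w * x for w, x in zip(weights, inputs))


def delta_rule_step(weights, inputs, target, learning_rate=0.1):
    """Return new weights after one error-driven adjustment."""
    error = predict(weights, inputs) - target  # actual output minus desired output
    # Each weight moves against the error in proportion to its input's activity,
    # so connections that contributed more to the error change more.
    return [w - learning_rate * error * x for w, x in zip(weights, inputs)]


def train(examples, epochs=200):
    """Repeatedly apply the delta rule to every (inputs, target) example."""
    weights = [0.0] * len(examples[0][0])
    for _ in range(epochs):
        for inputs, target in examples:
            weights = delta_rule_step(weights, inputs, target)
    return weights


# Hypothetical data generated by the "correct" weights [2.0, -1.0].
examples = [([1.0, 0.0], 2.0), ([0.0, 1.0], -1.0), ([1.0, 1.0], 1.0)]
learned = train(examples)
print(learned)  # approximately [2.0, -1.0]
```

Starting from zero, the weights drift toward the values that make the error vanish, which is the "minimize the error over time" idea in its simplest form.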

The Mathematical Framework

Though the biological details are complex, the process can be framed mathematically, which has been particularly useful in the realm of artificial neural networks [3].

Activation Functions

Activation functions determine how a neuron transforms its combined input into an output. In artificial neural networks, popular activation functions include the sigmoid and ReLU (Rectified Linear Unit) functions. These are loosely inspired by real neurons, which stay quiet below a threshold and cannot fire faster than some maximum rate, but they are simplifications rather than faithful biological models.
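The two activation functions named above are short enough to write out directly. This is a plain-Python sketch for illustration; the derivative helpers are included because backpropagation needs them, and the particular formulation of `sigmoid` (branching on the sign of the input to avoid overflow) is one common choice among several.

```python
import math


def sigmoid(z):
    """Squash any real input into the range (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative inputs, rewrite the formula so math.exp never overflows.
    e = math.exp(z)
    return e / (1.0 + e)


def sigmoid_derivative(z):
    """Slope of the sigmoid, largest (0.25) at z = 0."""
    s = sigmoid(z)
    return s * (1.0 - s)


def relu(z):
    """Pass positive input through unchanged; output zero otherwise."""
    return max(0.0, z)


def relu_derivative(z):
    # The derivative at exactly 0 is undefined; 0 is a common convention.
    return 1.0 if z > 0 else 0.0


for z in (-2.0, 0.0, 2.0):
    print(z, round(sigmoid(z), 4), relu(z))
```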

Loss Functions

Loss functions are mathematical functions used to quantify the ‘error’ in the system. The aim is to minimize the value produced by the loss function, thereby reducing the difference between the actual and desired outputs. Common loss functions include mean squared error, typically used when predicting continuous values, and cross-entropy, typically used for classification.
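Both loss functions can be written in a few lines. This sketch assumes the binary (two-class) form of cross-entropy, where each prediction is a probability between 0 and 1 and each label is 0 or 1; the small clipping constant `eps` is an assumption added only to avoid taking the logarithm of zero.

```python
import math


def mean_squared_error(predictions, targets):
    """Average of the squared differences between outputs and targets."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(targets)


def binary_cross_entropy(probabilities, labels, eps=1e-12):
    """Average penalty for predicting probability p when the true label is y."""
    total = 0.0
    for p, y in zip(probabilities, labels):
        p = min(max(p, eps), 1.0 - eps)  # keep p away from 0 and 1 so log() is defined
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(labels)


# Confident and correct costs little; confident and wrong costs a lot.
print(binary_cross_entropy([0.9], [1]), binary_cross_entropy([0.1], [1]))
print(mean_squared_error([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))
```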

Biological Plausibility of Backpropagation

A point of contention in neuroscience is whether backpropagation is a biologically plausible mechanism for learning in real neurons.

Arguments for Plausibility

Some researchers argue that similar processes occur in biological systems. For instance, there are known feedback mechanisms in the brain where higher-order regions send signals back to lower-order regions. This could be seen as a form of backpropagation, where the system adjusts based on output error.

Arguments Against Plausibility

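However, skeptics point out that the precise, mathematical nature of backpropagation algorithms in artificial neural networks may not be fully applicable to biological neurons. For example, the standard algorithm sends errors backward using exactly the same connection weights that carried the signal forward, and it is unclear how real synapses, which transmit in one direction, could share that information. Biological systems are also noisy, messy, and operate under constraints that are far different from those of computational models.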

Role of Neural Backpropagation in Learning and Memory

We’ve explored the structure and function of neurons, looked into the concept of neural backpropagation, and examined the mechanisms that make it work. But what are the practical implications of this fascinating process? Particularly, how does neural backpropagation contribute to learning and memory, two key aspects of cognitive health?

Learning Processes

Learning involves the acquisition of new knowledge, skills, or behaviors. It requires the ability to adjust and refine one’s responses based on experience. Let’s explore how neural backpropagation can enhance this ability.

Adaptive Learning

In adaptive learning, the brain modifies its behavior based on the outcomes of previous actions. Neural backpropagation, if it operates in the brain as proposed, could play an important role here: by adjusting the weights of synaptic connections based on errors in output, a neural network can make more accurate predictions or decisions in similar situations in the future.

Reinforcement Learning

In reinforcement learning, rewards and punishments guide behavior. Neural backpropagation may aid in optimizing the neural pathways that lead to rewards, thereby reinforcing specific behaviors. For example, if taking a certain action leads to a positive outcome, the neurons involved in that decision-making process may adjust their parameters to favor that action in future situations [4].
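The reward-driven part of this idea is often modeled with a simple reward-prediction-error update, sketched below for a hypothetical two-choice task. Note that this is a value-learning rule, not backpropagation: it illustrates how an outcome that is better than expected makes an action more likely to be chosen again. The task setup, the reward probabilities, and parameter names such as `learning_rate` and `epsilon` are assumptions for illustration.

```python
import random


def choose_action(values, epsilon, rng):
    """Usually pick the action with the highest learned value; sometimes explore."""
    if rng.random() < epsilon:
        return rng.randrange(len(values))
    return max(range(len(values)), key=lambda a: values[a])


def run_bandit(reward_probs, trials=2000, learning_rate=0.1, epsilon=0.1, seed=0):
    """Learn how rewarding each action is from trial-and-error experience."""
    rng = random.Random(seed)
    values = [0.0] * len(reward_probs)  # current estimate of each action's payoff
    for _ in range(trials):
        action = choose_action(values, epsilon, rng)
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        # Positive when the outcome beats expectations, negative when it falls short.
        prediction_error = reward - values[action]
        values[action] += learning_rate * prediction_error
    return values


# Hypothetical task: action 1 pays off 80% of the time, action 0 only 20%.
learned_values = run_bandit([0.2, 0.8])
print(learned_values)
```

Occasional exploration is what lets the learner discover that the initially untried action is better; after that, the greedy choice settles on it.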

Memory Retention and Recall

Memory is not a static entity; it’s a dynamic process that involves encoding, storage, and retrieval of information. The role of backpropagation in this process is still a subject of research, but several interesting connections can be made.

Strengthening Neural Pathways

Memories are believed to be stored as patterns of synaptic connections. Backpropagation could contribute to memory by fine-tuning these connections based on experience, thereby strengthening the neural pathways responsible for specific memories.

Enhancing Recall Accuracy

Backpropagation may also improve the accuracy of memory retrieval. By continually adjusting synaptic weights based on errors or inconsistencies in recalled information, the neural networks involved in memory could make future recall more accurate.

Applications in Education and Training

Understanding the role of neural backpropagation in learning and memory isn’t just academically interesting; it has practical applications in education and training.

Personalized Learning

With insights into how neural backpropagation works, educational programs could potentially be tailored to align with the natural learning processes of the brain, making them more effective.

Skill Acquisition and Mastery

In professions that require specialized skills or high levels of expertise, understanding neural backpropagation can inform training programs designed to accelerate skill acquisition and promote mastery.

Neural Backpropagation Implications for Cognitive Health

As we move from the theoretical to the practical, the implications of neural backpropagation for cognitive health come into sharper focus. Cognitive health is an expansive field that covers everything from memory and attention to problem-solving and decision-making. With the role of backpropagation in learning and memory now clearer, let’s turn our attention to its broader implications in maintaining cognitive health and potentially addressing cognitive disorders.

Potential for Cognitive Enhancement

Neural backpropagation offers exciting possibilities for the enhancement of cognitive abilities. Here’s how this process might contribute to improved cognitive performance [5].

Improving Cognitive Flexibility

Cognitive flexibility refers to our ability to adapt and shift our thinking in response to changing circumstances. Through backpropagation, neurons could potentially refine their connections to improve this adaptability, thereby enhancing our problem-solving and coping skills.

Enhancing Attentional Control

Backpropagation could also play a role in how effectively we can focus our attention and ignore distractions. By fine-tuning neural networks associated with attention, it may be possible to improve this crucial cognitive function.

Therapeutic Implications

The understanding of neural backpropagation might not just be useful for cognitive enhancement but could also have therapeutic applications.

Addressing Learning Disabilities

Individuals with learning disabilities often struggle with the efficient formation and retrieval of memories or the acquisition of new skills. Research into backpropagation could inform therapeutic interventions aimed at improving these neural processes, thus aiding in the treatment of such disorders.

Neurodegenerative Diseases

In conditions like Alzheimer’s disease, where neural connections degrade over time, insights into backpropagation could potentially guide treatments aimed at slowing this degeneration or enhancing neural plasticity.

Ethical Considerations

While the potential benefits are enticing, it’s crucial to consider the ethical dimensions that come into play.

Ethical Use of Cognitive Enhancement

The potential to enhance cognitive abilities through an understanding of backpropagation raises ethical questions about access, consent, and the potential for misuse. Who gets to benefit from such advancements, and what are the potential societal implications?

Privacy and Autonomy

If understanding backpropagation leads to technologies that can modify neural pathways, ethical considerations around privacy and individual autonomy become paramount. The ability to influence human cognition at the neural level could be a double-edged sword, with potential for both great benefit and misuse.

Neural Backpropagation in Artificial Neural Networks

We’ve looked at neural backpropagation through the lens of biology, exploring its significance in learning, memory, and cognitive health. But this concept is not confined to the realm of neuroscience; it has also made a tremendous impact in the field of artificial intelligence, particularly in the training of artificial neural networks.

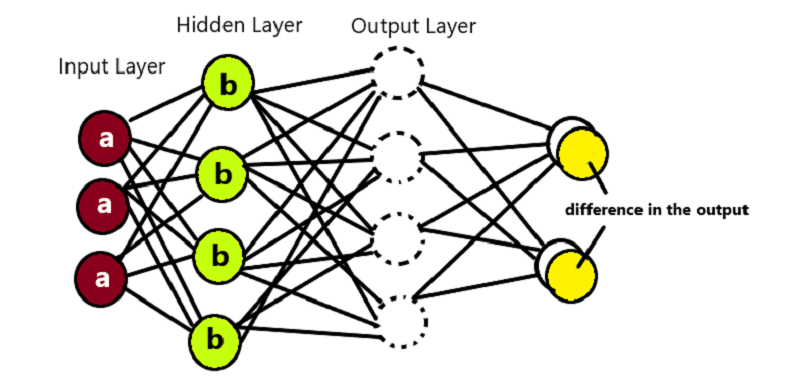

Basics of Artificial Neural Networks

Before diving into backpropagation’s role in artificial neural networks, let’s establish some fundamentals about these networks themselves.

What Are Artificial Neural Networks?

Artificial neural networks (ANNs) are computing systems inspired by the structure and function of biological neural networks. They consist of interconnected nodes or ‘artificial neurons’ that process information in a manner akin to biological neurons.

Layers in an Artificial Neural Network

Typically, ANNs consist of three types of layers: the input layer, hidden layers, and the output layer. Data flows from the input to the output layer, passing through hidden layers where computations take place. These layers enable the network to capture complex patterns and relationships in data.
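A forward pass through such a network can be sketched in plain Python. The layer sizes (two inputs, three hidden neurons, one output), the weight values, and the choice of a sigmoid hidden layer with a linear output are all arbitrary assumptions chosen to keep the example small.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer; weights[j] holds the incoming weights of neuron j."""
    return [activation(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def forward(x, params):
    """Input layer -> hidden layer (sigmoid) -> output layer (linear)."""
    hidden = dense_layer(x, params["w_hidden"], params["b_hidden"], sigmoid)
    output = dense_layer(hidden, params["w_out"], params["b_out"], lambda z: z)
    return hidden, output


params = {
    "w_hidden": [[0.5, -0.3], [0.8, 0.2], [-0.6, 0.4]],  # 3 hidden neurons, 2 inputs each
    "b_hidden": [0.0, 0.1, -0.1],
    "w_out": [[1.0, -1.0, 0.5]],                          # 1 output neuron, 3 inputs
    "b_out": [0.0],
}
hidden, output = forward([1.0, 2.0], params)
print(hidden, output)
```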

Role of Backpropagation in Training ANNs

Backpropagation is a cornerstone algorithm used for training artificial neural networks. Here’s how it contributes to the learning process.

Error Calculation and Propagation

In ANNs, backpropagation works by calculating the error at the output and propagating this error information back through the network. This helps adjust the weights of connections between artificial neurons, much like how we discussed backpropagation affecting synaptic weights in biological neurons.
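For a network with one hidden neuron, the whole procedure fits on a page. The sketch below assumes a sigmoid hidden unit, a linear output, and the loss 0.5 * (output - target)^2. It computes the gradients with the chain rule, passing the error backward from the output to the hidden layer, and then checks them against a brute-force finite-difference estimate. The parameter values are arbitrary.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def loss_and_grads(w1, b1, w2, b2, x, target):
    """Forward pass, then backward pass, for input -> sigmoid hidden -> linear output."""
    # Forward pass, caching intermediate values for reuse.
    h = sigmoid(w1 * x + b1)
    y = w2 * h + b2
    loss = 0.5 * (y - target) ** 2

    # Backward pass: start from the output error and apply the chain rule.
    d_y = y - target              # dLoss/dy: the error at the output
    d_w2 = d_y * h                # dLoss/dw2
    d_b2 = d_y                    # dLoss/db2
    d_h = d_y * w2                # error propagated back to the hidden neuron
    d_z1 = d_h * h * (1.0 - h)    # through the sigmoid's derivative
    d_w1 = d_z1 * x               # dLoss/dw1
    d_b1 = d_z1                   # dLoss/db1
    return loss, [d_w1, d_b1, d_w2, d_b2]


def numerical_grads(params, x, target, eps=1e-6):
    """Estimate each gradient by nudging one parameter up and down."""
    grads = []
    for i in range(len(params)):
        up, down = list(params), list(params)
        up[i] += eps
        down[i] -= eps
        loss_up, _ = loss_and_grads(*up, x, target)
        loss_down, _ = loss_and_grads(*down, x, target)
        grads.append((loss_up - loss_down) / (2 * eps))
    return grads


params = [0.4, -0.1, 1.5, 0.2]  # w1, b1, w2, b2: arbitrary starting values
loss, analytic = loss_and_grads(*params, 0.7, 0.0)
numeric = numerical_grads(params, 0.7, 0.0)
for a, n in zip(analytic, numeric):
    print(f"backprop {a:+.6f}   finite difference {n:+.6f}")
```

The finite-difference check costs two extra forward passes per parameter, which is why real networks rely on the backward pass instead: it yields every gradient from a single sweep.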

Optimization Techniques

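Backpropagation itself computes the gradient of the error, that is, how much the error would change if each weight were nudged. It is paired with an optimization technique such as stochastic gradient descent, which uses those gradients to update the weights in the direction that reduces the error. The end goal is to train the network to make accurate predictions or classifications.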
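Here is a minimal sketch of stochastic gradient descent, assuming the simplest possible model, a straight line y = w*x + b, so that the gradients can be written by hand; a real multi-layer network would obtain its gradients from backpropagation instead. The data, learning rate, and epoch count are illustrative assumptions.

```python
import random


def sgd_fit_line(data, learning_rate=0.05, epochs=200, seed=0):
    """Fit y = w*x + b by stochastic gradient descent on 0.5 * squared error."""
    rng = random.Random(seed)
    data = list(data)  # copy so shuffling does not reorder the caller's list
    w, b = 0.0, 0.0
    for _ in range(epochs):
        rng.shuffle(data)  # "stochastic": visit examples one at a time, in random order
        for x, y in data:
            error = (w * x + b) - y
            # Gradients of 0.5 * error**2 with respect to w and b.
            w -= learning_rate * error * x
            b -= learning_rate * error
    return w, b


# Noise-free hypothetical data on the line y = 2x + 1, for x from -1.0 to 1.0.
points = [(x / 10, 2.0 * (x / 10) + 1.0) for x in range(-10, 11)]
w, b = sgd_fit_line(points)
print(round(w, 4), round(b, 4))
```

Updating after every single example, rather than after the whole dataset, is what makes the method "stochastic"; it trades noisier steps for many more of them.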

Applications of ANNs with Backpropagation

Artificial neural networks trained using backpropagation are used in various applications that impact our daily lives.

Natural Language Processing

One application is in natural language processing, which powers conversational agents and translation services. Backpropagation aids in fine-tuning the network to understand and generate human language more accurately.

Image Recognition

Another area is image recognition, where ANNs can identify objects or even help diagnose medical conditions from X-rays or MRI scans. Backpropagation supplies the error gradients that let the network learn from its mistakes, improving its performance over time.

Bridging Biology and Technology

The success of backpropagation in artificial neural networks often fuels curiosity about its role in biological neural networks, and vice versa.

Insights into Human Learning

The algorithms used in ANNs can offer insights into how learning might occur in biological systems. This cross-disciplinary understanding could be invaluable in both improving machine learning algorithms and understanding biological cognition.

Ethical and Philosophical Questions

As artificial neural networks become more sophisticated, ethical questions similar to those we discussed in the context of cognitive health also come into play. The boundaries between biological and artificial intelligence become increasingly blurred, raising important ethical and philosophical questions about the nature of intelligence, consciousness, and agency.

References

[1] How Does Backpropagation in a Neural Network Work?

[2] Can the Brain Do Backpropagation?

[3] Neural backpropagation

[4] Backpropagation and the brain

[5] How Does Back-Propagation Work in Neural Networks?